One of the technical bottlenecks of Augmented Reality (AR) Near-Eye Display (NED) devices is the optical combiner, which integrates the virtual and real worlds.

Among the numerous existing technological approaches, diffractive optical waveguides are favored by many leading companies due to their advantages such as wide eyebox, compact size, and good scalability. However, diffractive optical waveguides also face many technical bottlenecks, such as poor color uniformity and low energy efficiency.

The principle of diffractive optical waveguides

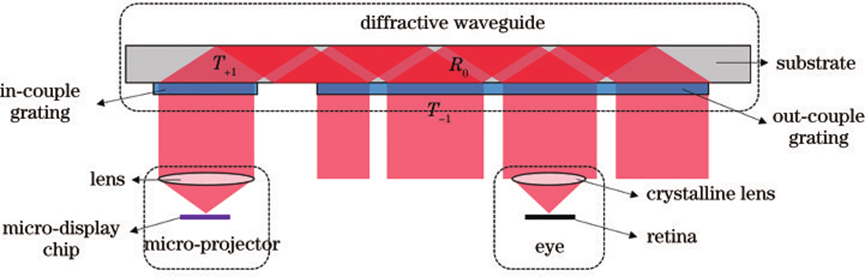

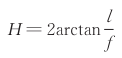

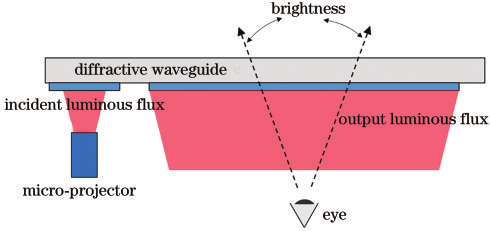

The principle of the near-eye display system based on diffractive optical waveguides is illustrated in Figure 1. This system consists of three parts: a micro-projector, diffractive optical waveguides, and the human eye.

Figure 1: Principle of the diffractive optical waveguide

The micro-projector generates the virtual image and consists of a micro-display chip and an imaging lens group. The micro-display chip is located at the focal plane of the imaging lens group. Light emitted from any point on the chip therefore becomes a collimated beam after passing through the lens group, and this beam illuminates the diffractive optical waveguide. In other words, the micro-projector images the micro-display at infinity.

The diffractive optical waveguide "transports" the virtual image to the user's eye and at the same time replicates the pupil. It consists of a glass plate with highly parallel surfaces (the waveguide) and several diffraction gratings fabricated on the glass. Figure 1 shows two typical gratings, the input grating and the output grating.

When collimated light from the micro-projector reaches the input grating, it is diffracted. The transmitted +1 order (T+1) of the grating satisfies the total internal reflection (TIR) condition inside the glass substrate. It therefore propagates through the glass by TIR until it reaches the output grating. There, the transmitted -1 order (T-1) of the output grating is coupled out of the glass, while the zeroth reflected order (R0) continues to propagate by TIR. The process repeats: the beam meets the output grating many times, and each encounter couples out part of the light. This mechanism replicates the pupil.
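Whether the T+1 order is trapped can be checked with the grating equation. The following is a minimal sketch under stated assumptions: light arrives from air, the grating lines are perpendicular to the plane of incidence, and diffraction efficiency is ignored. The function names and the numbers (532 nm, 380 nm period, n = 1.8) are hypothetical. The order is guided only if it propagates inside the glass and n·sin(θ) exceeds 1, the TIR condition at the glass/air surface.

```python
import math


def diffraction_angle_in_glass(theta_in_deg, wavelength_nm, period_nm, n_glass, order=1):
    """Diffraction angle (degrees) inside the glass for light arriving from air.

    Uses the in-plane grating equation n_glass * sin(theta_m) = sin(theta_in) + m * lambda / period.
    Returns None when the order is evanescent (no real solution).
    """
    s = (math.sin(math.radians(theta_in_deg)) + order * wavelength_nm / period_nm) / n_glass
    if abs(s) >= 1.0:
        return None
    return math.degrees(math.asin(s))


def is_guided(theta_in_deg, wavelength_nm, period_nm, n_glass, order=1):
    """True if the diffracted order propagates in the glass and exceeds the TIR critical angle."""
    theta_m = diffraction_angle_in_glass(theta_in_deg, wavelength_nm, period_nm, n_glass, order)
    if theta_m is None:
        return False
    # TIR at the glass/air interface requires n_glass * sin(theta_m) > 1.
    return n_glass * abs(math.sin(math.radians(theta_m))) > 1.0


# Hypothetical values: green light, 380 nm grating period, n = 1.8 glass.
for theta_in in (-30, -20, 0, 20, 40):
    print(theta_in, is_guided(theta_in, 532.0, 380.0, 1.8))
```

Combining the two conditions gives 1 < sin(θ_in) + λ/Λ < n. With these hypothetical numbers, the order stays trapped only while -0.4 < sin(θ_in) < 0.4, which is roughly ±23.6° of incidence.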

The collimated light coupled out of the waveguide is focused by the crystalline lens onto the retina, where it forms an image that is perceived by the human eye. Meanwhile, images from the real world can directly enter the eye through the flat glass substrate. Thus, the human eye can simultaneously perceive both virtual images and the real world.

Based on this principle, one characteristic of diffractive optical waveguides is that they replicate the incident beam. This lets the eye see the complete virtual image over a wide range of eye positions, so diffractive optical waveguides offer a large eyebox.
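The spacing of the replicated beams follows from basic plate geometry; this formula is not stated in the source. A ray guided at angle θ (from the surface normal) inside a plate of thickness t returns to the grating surface after a lateral shift of 2t·tan(θ). Out-coupled copies of the beam are therefore spaced by that pitch. The sketch below assumes both gratings are on the same surface and ignores beam width. The thickness, angle, and grating length are hypothetical.

```python
import math


def replica_pitch_mm(thickness_mm, theta_deg):
    """Lateral shift between successive encounters with the same surface
    for a ray guided at theta_deg (from the normal) in a plate of the given thickness."""
    return 2.0 * thickness_mm * math.tan(math.radians(theta_deg))


def replica_count(out_grating_len_mm, thickness_mm, theta_deg):
    """Number of encounters with an output grating of the given length,
    assuming the first encounter happens exactly at its leading edge."""
    pitch = replica_pitch_mm(thickness_mm, theta_deg)
    return int(out_grating_len_mm // pitch) + 1


# Hypothetical values: 1 mm glass, ray guided at 51 degrees, 20 mm output grating.
print(round(replica_pitch_mm(1.0, 51.0), 2), replica_count(20.0, 1.0, 51.0))
```

A thicker plate or a steeper guided angle spreads the replicas farther apart, so fewer copies land on an output grating of a given length.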

In addition, the thickness of a diffractive optical waveguide is determined only by the flat glass plate. The waveguide can therefore be made light and thin enough to meet consumers' expectations for a comfortable user experience.

For mass production, diffractive optical waveguides can be manufactured by nanoimprint lithography, which provides sufficient production capacity and keeps costs under control.

These advantages make diffractive optical waveguides a mainstream augmented reality near-eye display solution.

The key parameters and measurement methods of diffractive optical waveguides

Field of view

According to the principle of diffractive optical waveguides, a point on the virtual image is transformed by the micro-projector system into collimated light in a specific direction. This collimated light propagates through the diffractive optical waveguide, expands, and then converges onto a point on the retina through the crystalline lens. From this process, it is evident that a point observed by the human eye on the virtual image corresponds to collimated light propagating in a specific direction in space. The angular range of collimated light determines the size of the image seen by the human eye. Therefore, the field of view (FOV) is typically used to measure the size of the virtual image displayed by the diffractive optical waveguide. A larger FOV results in a larger virtual image displayed.

A larger field of view (FOV) can provide richer information and create a more immersive experience. Therefore, increasing the FOV is a goal tirelessly pursued by researchers.

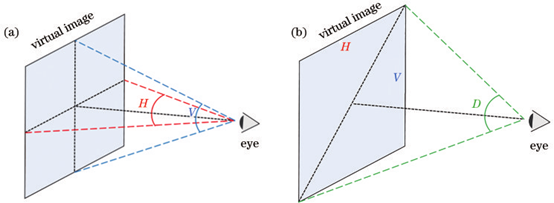

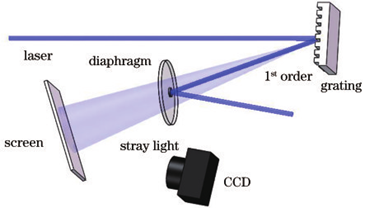

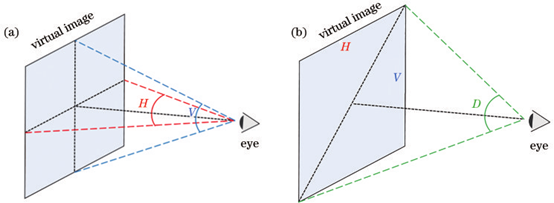

Figure 2: Schematic diagram of the definition of the field of view

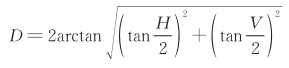

The virtual image presented by a diffractive optical waveguide is usually rectangular, so at least two parameters are needed to specify the size of the field of view. There are two common ways to express it:

- Give the horizontal field of view H and the vertical field of view V, as shown in Figure 2(a).
- Give the aspect ratio a of the horizontal and vertical fields of view, a = tan(H/2) : tan(V/2), together with the diagonal field of view D, as shown in Figure 2(b).

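From simple geometry, the two representations are related by

$$\tan\frac{D}{2}=\sqrt{\tan^2\frac{H}{2}+\tan^2\frac{V}{2}},\qquad a=\frac{\tan(H/2)}{\tan(V/2)}$$

or, solving for H and V,

$$H=2\arctan\frac{a\tan(D/2)}{\sqrt{1+a^2}},\qquad V=2\arctan\frac{\tan(D/2)}{\sqrt{1+a^2}}$$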

It should be emphasized that two parameters are needed to fully specify the field of view. Yet many researchers focus only on the diagonal field of view D and ignore the aspect ratio a. In fact, for diffractive optical waveguides, even at the same 40° diagonal field of view, different aspect ratios lead to completely different design difficulties.
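The conversion follows from treating the image as a rectangle at unit distance. Its half-width is tan(H/2), its half-height is tan(V/2), and its half-diagonal is tan(D/2). Hence tan²(D/2) = tan²(H/2) + tan²(V/2), with a = tan(H/2)/tan(V/2). The Python sketch below converts between the two representations; the function names are illustrative, not from the source. It shows how H and V change with a at a fixed 40° diagonal.

```python
import math


def hv_from_diagonal(d_deg, aspect):
    """Convert diagonal FOV D and aspect ratio a = tan(H/2)/tan(V/2) into (H, V) in degrees."""
    t_d = math.tan(math.radians(d_deg) / 2.0)
    k = math.sqrt(1.0 + aspect ** 2)
    h = 2.0 * math.degrees(math.atan(aspect * t_d / k))
    v = 2.0 * math.degrees(math.atan(t_d / k))
    return h, v


def diagonal_from_hv(h_deg, v_deg):
    """Convert (H, V) in degrees into (D in degrees, aspect ratio a)."""
    th = math.tan(math.radians(h_deg) / 2.0)
    tv = math.tan(math.radians(v_deg) / 2.0)
    d = 2.0 * math.degrees(math.atan(math.hypot(th, tv)))
    return d, th / tv


for a in (1.0, 4.0 / 3.0, 16.0 / 9.0):
    h, v = hv_from_diagonal(40.0, a)
    print(f"a = {a:.2f}: H = {h:.1f} deg, V = {v:.1f} deg")
```

By these formulas, a 40° diagonal corresponds to roughly 28.9° × 28.9° at a = 1 but roughly 35.2° × 20.2° at a = 16:9. In the second case the waveguide must carry a noticeably wider horizontal field, which is one reason the aspect ratio changes the design difficulty.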

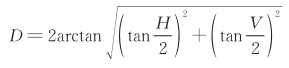

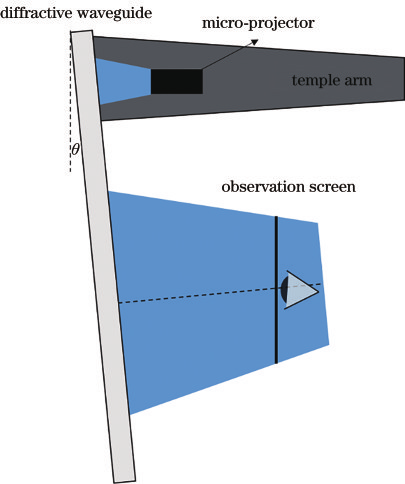

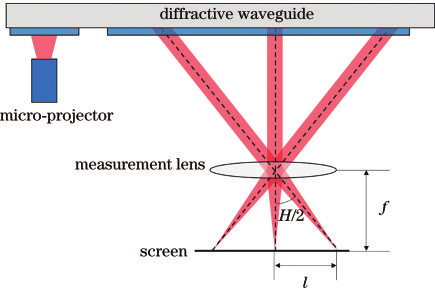

The measurement method for the field of view is shown in Figure 3. A calibrated, aberration-corrected measuring lens group is placed at the position where the eye would observe. Parallel beams at different field angles converge to different points on the receiving screen. The field of view can therefore be calculated from the size of the image formed on the screen.

Figure 3: Schematic diagram of the field of view measurement method

In Figure 3, the focal length of the measuring lens group is f and the half-width of the image is l. The corresponding horizontal field of view is

$$H = 2\arctan\frac{l}{f}$$
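The relation H = 2·arctan(l/f) can be applied to each axis of the measured image. The Python sketch below is illustrative; the function name and the numbers are hypothetical. It assumes the measuring lens is distortion-free and that the half-width and half-height are measured from the optical axis. It also derives the diagonal field from the half-diagonal of the image.

```python
import math


def fov_from_image(half_width_mm, half_height_mm, focal_mm):
    """Horizontal, vertical and diagonal FOV (degrees) from the half-sizes of the image
    formed on the receiving screen by a distortion-free measuring lens of focal length f."""
    h = 2.0 * math.degrees(math.atan(half_width_mm / focal_mm))
    v = 2.0 * math.degrees(math.atan(half_height_mm / focal_mm))
    d = 2.0 * math.degrees(math.atan(math.hypot(half_width_mm, half_height_mm) / focal_mm))
    return h, v, d


# Hypothetical reading: f = 25 mm, image half-size 7.5 mm x 4.2 mm.
h, v, d = fov_from_image(7.5, 4.2, 25.0)
print(f"H = {h:.1f} deg, V = {v:.1f} deg, D = {d:.1f} deg")
```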

It should be emphasized that the method in Figure 3 measures the actual field of view. The size of the virtual image perceived by the eye, however, depends on more than the actual field of view. It is also affected by image clarity, uniformity, the eye movement range of the module, and other factors. For example, of two near-eye display modules with the same actual field of view, the one with the larger eye movement range gives the user the impression of a "larger" field of view. There are currently no quantitative studies of how these factors affect the perceived field of view. In addition, how much the module occludes the external environment also affects the user's judgment of the field of view.

Therefore, when designing a diffractive optical waveguide near-eye display module, one should not pursue the field of view as a single parameter. Instead, the key parameters should be balanced so that the field of view perceived by the eye does not fall short of design expectations.

Eye movement range

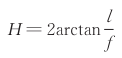

The eye movement range (commonly called the eyebox) is one of the important indicators of a near-eye display module. It is the three-dimensional region between the display module and the eye within which the eye can observe a virtual image that meets a given imaging standard.

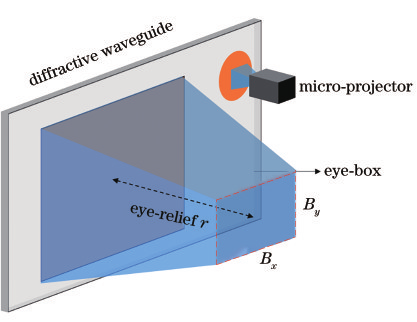

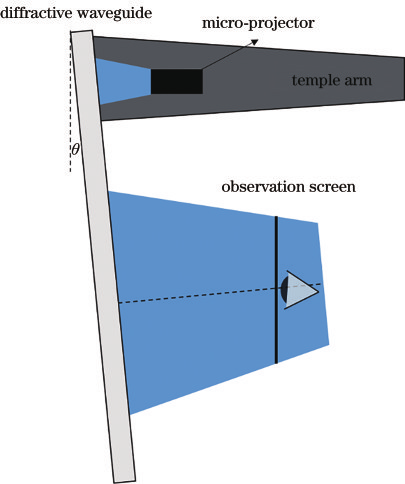

Figure 4: Schematic diagram of the eye movement range of a diffractive optical waveguide

Figure 4 shows the eye movement range of a diffractive optical waveguide. The parallel beams emitted by the micro-projector in different directions form a prism-shaped region of light. Its boundary is determined by the field of view and the exit pupil size of the micro-projector.

After the beams are coupled out of the waveguide, the region where all out-coupled beams overlap in space also forms a prism-shaped region. Only when the eye is inside this region can it receive light from all field angles; otherwise, some field angles are lost. This region is therefore the theoretical eye movement range of the diffractive optical waveguide.

It should be emphasized that the eye movement range is a three-dimensional region. Because of the module's symmetry, however, many near-eye display modules describe it only by the eye relief r (the distance from the eye to the module) and the size Bx×By of the observation area at that eye relief. This is the dotted box in Figure 4.
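The overlap region can be estimated with a one-dimensional idealization, which is an assumption rather than the source's model. Suppose every point of the out-coupling region emits all field angles, and treat the eye pupil as a point. A beam at field angle α leaving the out-coupler at position x arrives at x + r·tan(α) at eye relief r. Requiring every angle in [-θ/2, θ/2] to reach the eye gives a width B = W - 2r·tan(θ/2), where W is the out-coupler width. Applying this per axis gives Bx and By. The numbers below are hypothetical.

```python
import math


def eyebox_width_mm(outcoupler_width_mm, fov_deg, eye_relief_mm):
    """Theoretical 1D eyebox width at a given eye relief for a symmetric field of view.

    Idealization: every point of the out-coupling region emits all field angles,
    and the eye pupil is treated as a point. Returns 0 when no position sees all angles.
    """
    width = outcoupler_width_mm - 2.0 * eye_relief_mm * math.tan(math.radians(fov_deg) / 2.0)
    return max(width, 0.0)


# Hypothetical module: 30 mm-wide out-coupling region, 40 deg horizontal FOV.
for r in (10.0, 15.0, 20.0):
    print(f"r = {r:.0f} mm -> Bx = {eyebox_width_mm(30.0, 40.0, r):.1f} mm")
```

Because the width shrinks as r grows, Bx×By means little unless the eye relief at which it was taken is also given.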

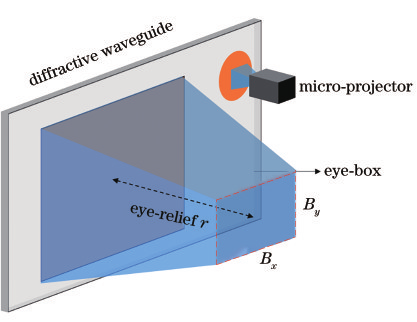

Figure 5: Schematic diagram of the eye movement range of a near-eye display module mounted at a tilt angle

However, because comfort requirements are higher, diffractive optical waveguide modules are often mounted in more complex orientations. As shown in Figure 5, the micro-projector is housed in the temple of the glasses frame. For wearing comfort, the lens is tilted by an angle θ from the vertical. In this case, the eye relief and the size of the observation area alone cannot fully describe the eye movement range. The relative orientation between the observation plane and the diffractive waveguide must also be specified, which is often overlooked.

In addition, the concept of eye movement range is somewhat ambiguous, and there is currently no standard definition. The main controversy is how to determine its boundary. This is especially difficult for diffractive optical waveguides, whose imaging uniformity is poor. Deciding what imaging standard the observed image should be required to meet deserves even more discussion.

The usual way to determine the boundary of the eye movement range is as follows:

1. Choose a parameter A (such as brightness or a uniformity index) as the evaluation index.
2. Measure A over a region Bx0×By0 at a fixed eye relief r0, and record the maximum value Amax within that region.
3. Set a threshold β. Positions where A falls to βAmax are taken as the boundary of the eye movement range at eye relief r0.
4. To determine the boundary in three dimensions, measure A at several eye relief distances and apply the same criterion at each.
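The thresholding procedure at one eye relief can be sketched in Python. This is a minimal illustration, not the source's implementation. It assumes measurements are stored as a dictionary keyed by (x, y) eye position, that larger A is better (as for brightness), and that the extent is reported as the bounding box of accepted positions. The brightness map is hypothetical.

```python
def eyebox_extent(samples, beta):
    """Positions inside the eye movement range at one eye relief, and their (Bx, By) extent.

    samples: dict mapping (x, y) eye positions (e.g. mm) to the measured parameter A.
    beta:    threshold ratio; a position is inside if A >= beta * A_max.
    Assumes larger A is better (e.g. brightness). The extent is the bounding box of
    the inside positions, so its resolution is limited by the measurement grid step.
    """
    a_max = max(samples.values())
    inside = [pos for pos, a in samples.items() if a >= beta * a_max]
    xs = [x for x, _ in inside]
    ys = [y for _, y in inside]
    return inside, (max(xs) - min(xs), max(ys) - min(ys))


# Hypothetical brightness map on a 1 mm grid that falls off faster along x than along y.
grid = {(x, y): max(0, 100 - 4 * x * x - y * y) for x in range(-8, 9) for y in range(-8, 9)}
inside, (bx, by) = eyebox_extent(grid, beta=0.5)
print(f"Bx x By = {bx} mm x {by} mm")  # Bx x By = 6 mm x 14 mm
```

Repeating the call for maps measured at several eye relief distances, with the same β, gives the three-dimensional boundary described above.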

It should be noted that the choice of evaluation parameter A will affect the size of the eye movement range.

If A is the brightness of the virtual image, the criterion is simple and intuitive. It suits applications with high brightness requirements, such as outdoor information prompts.

If A is a uniformity index, the calculation is more complex. It suits applications that demand higher image quality, such as leisure and entertainment.

A could also be the modulation transfer function (MTF). However, this parameter is better suited to geometric-optics (catadioptric) near-eye display solutions. The working principle of diffractive optical waveguides means that the MTF does not differ significantly between positions.

In practice, the performance indicators of a diffractive optical waveguide change slowly with observation position, and the region where the eye sees the complete virtual image has no sharp boundary. The evaluation criterion must therefore be stated explicitly whenever the eye movement range is measured. The evaluation parameter should be chosen according to the user's usage scenario.

Brightness, brightness uniformity and energy utilization

Brightness is a very important indicator of an augmented reality near-eye display module, because it determines how bright the virtual image appears to the eye. With insufficient brightness, the module cannot be used under strong outdoor light. Raising the brightness of diffractive optical waveguides is therefore very important.

In addition, the imaging brightness of a diffractive optical waveguide is not uniform but varies with the field angle. Brightness uniformity is therefore also an important indicator. It determines how comfortable the image is to view, and it affects perceived indicators such as the field of view and the eye movement range.

In addition to brightness, near-eye display modules also need to focus on energy utilization. Improving energy utilization can reduce module power consumption and extend battery life.

Brightness, brightness uniformity and energy utilization are interrelated.

In photometry, brightness (luminance) L is generally used to evaluate how bright or dark an observed object appears. It is the luminous flux per unit projected area per unit solid angle in a given direction. Its unit is cd/m², also called the nit.

Generally, micro-projection systems use luminous flux Φ to describe the total light energy they provide, and the unit is lm.

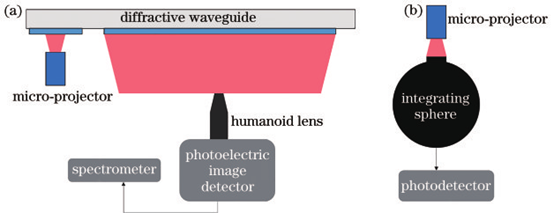

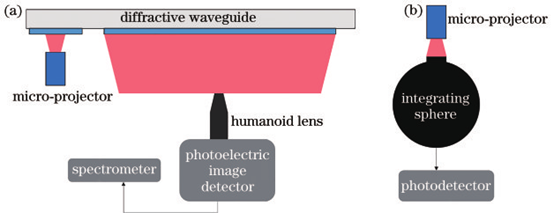

Figure 6: Schematic diagram of the methods for measuring the brightness and chromaticity of a diffractive optical waveguide and the luminous flux of the micro-projector

The methods for measuring in-eye brightness and luminous flux are shown in Figure 6.

The optical path for measuring the brightness entering the eye is shown in Figure 6(a). A luminance meter is placed at the eye relief distance. It consists of an eye-simulating lens, a photodetector and a spectrometer.

- The eye-simulating lens is an imaging lens whose aperture stop lies at the lens surface. Its optical performance is similar to that of the human eye, so it can simulate the eye's visual characteristics.
- The photodetector records the image formed by the lens.
- Light from a small region at the center of the field of view is directed into the spectrometer.

Combining the spectrometer and photodetector results yields brightness and chromaticity information over the entire field of view.

The optical path for measuring the micro-projector's luminous flux is shown in Figure 6(b). The light emitted by the micro-projector is collected by an integrating sphere, and the luminous flux is obtained from the photodetector signal.

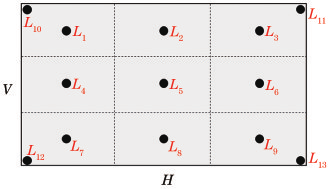

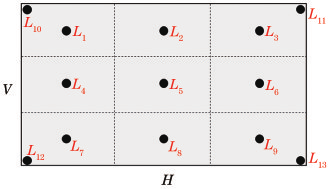

Figure 7: Schematic diagram of the method for evaluating the in-eye brightness of a diffractive optical waveguide

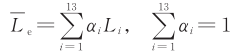

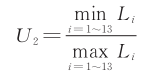

As shown in Figure 7, the virtual image observed by the eye is divided evenly into 9 sub-regions; more sub-regions can be used if the brightness varies sharply. The brightness L1~L9 at the center of each sub-region is measured, and the average brightness of the virtual image is defined as the mean of these 9 values. To better reflect the brightness at the edge of the field of view, the brightness at the four corners, L10~L13, can also be measured. The average brightness is then computed from all 13 points L1~L13.

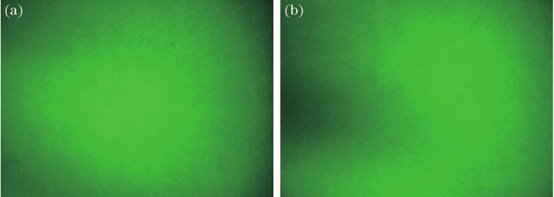

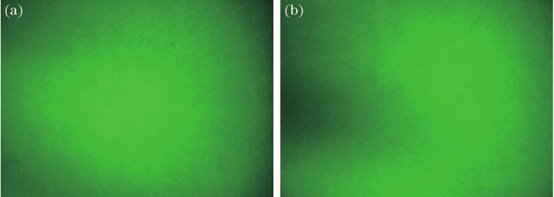

Figure 8: The effect of different brightness distributions on perceived brightness

However, brightness evaluation should also take full account of human visual characteristics and the usage scenario. As shown in Figure 8, two pure-green images have essentially the same 9-point average brightness. In Figure 8(a), the center of the field of view is bright and the edges are dim. Figure 8(b) instead has an obvious dark area on the left side of the field of view.

The eye perceives Figure 8(a) as brighter than Figure 8(b). This is because the eye is more sensitive to brightness in the central field of view, so it tends to judge an image with a brighter center as having a higher average brightness. Human visual characteristics should therefore be considered when evaluating brightness. For example, the measured points can be weighted, with the center point weighted more heavily than the edge points, to obtain a brightness measurement value that matches human perception.

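The brightness measurement value can then be expressed as a weighted average of the N measured points (N = 9 or 13):

$$\tilde{L}=\sum_{i=1}^{N} w_i L_i,\qquad \sum_{i=1}^{N} w_i = 1,$$

where the weight $w_i$ of the central point is larger than those of the edge points.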
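The plain and weighted averages can be compared in a short Python sketch. The readings, the point ordering (index 0 is the center, not Figure 7's numbering), and the weight of 2 for the center are all hypothetical. The weights are normalized by their sum, which matches a weighted sum whose weights add up to 1. The two readings mimic Figure 8: equal plain averages, but the center-weighted value favors the image with the brighter center.

```python
def mean_brightness(values, weights=None):
    """Weighted mean of sampled brightness values (cd/m^2).

    With weights=None every point counts equally (the plain 9- or 13-point average).
    Weights are normalized by their sum, so they need not add up to 1.
    """
    if weights is None:
        weights = [1.0] * len(values)
    if len(weights) != len(values):
        raise ValueError("values and weights must have the same length")
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Hypothetical 9-point readings; index 0 is the center point, indices 1-8 surround it.
bright_center = [900] + [500] * 8            # like Figure 8(a): bright center, dim edges
dark_left = [600] + [300] * 3 + [680] * 5    # like Figure 8(b): dark patch on one side
center_weighted = [2.0] + [1.0] * 8          # hypothetical weights: center counts double

print(mean_brightness(bright_center), mean_brightness(dark_left))  # equal plain averages
print(mean_brightness(bright_center, center_weighted),
      mean_brightness(dark_left, center_weighted))                 # 580.0 550.0
```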

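For brightness uniformity, the standard deviation of L1~L13 divided by their average value $\bar{L}$ can be used as the measure, that is

$$U_1=\frac{1}{\bar{L}}\sqrt{\frac{1}{13}\sum_{i=1}^{13}\left(L_i-\bar{L}\right)^2},\qquad \bar{L}=\frac{1}{13}\sum_{i=1}^{13}L_i$$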

Dividing by the average value removes the influence of the absolute brightness level on uniformity. The closer U1 is to 0, the better the brightness uniformity. Although U1 uses the information from every measurement point, it is not intuitive. A more direct and simple alternative is the ratio of the minimum to the maximum of L1~L13, that is

$$U_2=\frac{\min_i L_i}{\max_i L_i}$$

The value range of U2 is [0, 1]. The closer to 1, the better the uniformity. U2 has a clear value range, and its physical meaning is easier to understand than U1.
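Both uniformity indices are one-liners with Python's standard `statistics` module. The source does not say whether U1 divides by N or N-1. The sketch below assumes the population standard deviation (divide by N). The 13 readings are hypothetical.

```python
import statistics


def uniformity_u1(values):
    """U1 = standard deviation / mean; 0 means perfectly uniform.
    Uses the population standard deviation (divide by N), an assumption."""
    return statistics.pstdev(values) / statistics.mean(values)


def uniformity_u2(values):
    """U2 = min / max, in [0, 1]; 1 means perfectly uniform."""
    return min(values) / max(values)


# Hypothetical 13-point readings in cd/m^2 (L1..L13).
readings = [820, 760, 790, 700, 850, 710, 640, 690, 650, 520, 560, 480, 500]
print(f"U1 = {uniformity_u1(readings):.3f}, U2 = {uniformity_u2(readings):.3f}")
```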

Figure 9: Schematic diagram of the light energy utilization index of a diffractive optical waveguide

For energy utilization, researchers usually take the ratio of the in-eye brightness L to the incident luminous flux Φ of the micro-projector, ηD = L/Φ, as the light energy utilization of a diffractive optical waveguide. Its unit is cd·m⁻²·lm⁻¹, and Figure 9 shows the evaluation method. The advantage of this unit is that the in-eye brightness can be estimated quickly, so engineers can judge at once whether a module suits a given scenario.

For example, if a diffractive optical waveguide has an energy utilization of 100 cd·m⁻²·lm⁻¹, pairing it with a 10 lm micro-projector gives a maximum in-eye brightness of 1000 cd/m². This is enough for most indoor scenarios but somewhat insufficient outdoors under strong light.

To evaluate the energy utilization of the whole module, the luminous efficacy γ of the micro-projector must also be measured. This is the luminous flux produced per unit of power consumption, in lm/W. The energy utilization of the module is then ηM = γηD, in cd·m⁻²·W⁻¹.
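The energy bookkeeping above reduces to a few multiplications, sketched here in Python. The function names are illustrative. The first example reproduces the 100 cd·m⁻²·lm⁻¹ × 10 lm case from the text. The projector efficacy γ = 2 lm/W in the second example is a hypothetical value used only to show how ηM converts a brightness target into electrical power.

```python
def waveguide_efficiency(in_eye_cd_m2, projector_flux_lm):
    """eta_D = L / Phi, in cd m^-2 lm^-1."""
    return in_eye_cd_m2 / projector_flux_lm


def in_eye_brightness(eta_d, projector_flux_lm):
    """Expected in-eye brightness L = eta_D * Phi, in cd/m^2."""
    return eta_d * projector_flux_lm


def module_efficiency(eta_d, luminous_efficacy_lm_per_w):
    """eta_M = gamma * eta_D, in cd m^-2 W^-1."""
    return luminous_efficacy_lm_per_w * eta_d


# The example from the text: 100 cd m^-2 lm^-1 with a 10 lm micro-projector.
print(in_eye_brightness(100, 10))  # 1000 (cd/m^2)

# Hypothetical projector efficacy gamma = 2 lm/W: electrical power needed for 1000 cd/m^2.
eta_m = module_efficiency(100, 2.0)
print(1000 / eta_m)  # 5.0 (watts)
```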

Note that evaluating energy utilization from the in-eye brightness ignores the energy at other positions within the eye movement range. The eye movement range of a diffractive optical waveguide is large, so the energy is spread across it, and much of the light never enters the eye (for example, the light outside the dotted line in Figure 9). As a result, the waveguide energy utilization obtained by this method is low. When selecting a near-eye display, one should therefore weigh the eye movement range against energy utilization. When evaluating a module, the eye movement range under which its energy utilization was measured should also be stated.

Chromaticity uniformity

The brightness uniformity of diffractive optical waveguides usually leaves room for improvement. When a white image is displayed, the brightness distributions of the different colors may differ completely. This causes obvious chromaticity non-uniformity: the image seen by the eye shows noticeable color differences.

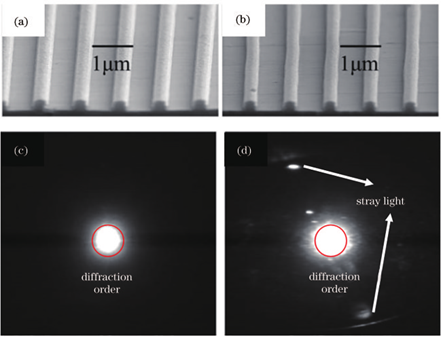

According to colorimetry, any color can be described by the coordinates of a point in a color space. The CIE 1976 L*u*v* uniform color space is recommended for describing the color at a given point. Its advantage is that the distance between two points in this space reflects how differently the eye perceives the two colors: the farther apart two colors are, the greater the perceived color difference.

Chromaticity is measured in the same way as brightness, as shown in Figure 6(a).

As with brightness, the virtual image seen by the eye can be divided into N small regions. Let the chromaticity coordinates of the center point of region i be (ui', vi'). The chromaticity non-uniformity index can then be defined as

$$\Delta u'v' = \max_{1 \le i < j \le N} \sqrt{\left(u_i' - u_j'\right)^2 + \left(v_i' - v_j'\right)^2}$$

That is, the largest distance between any two measurement points on the chromaticity diagram is used to measure chromaticity non-uniformity, as shown in Figure 10.

Figure 10: Schematic diagram of the method for measuring the chromaticity non-uniformity of a diffractive optical waveguide

Figure 10 shows the 13 measurement points plotted in the color space; their positions on the virtual image are shown in Figure 7. The distance between the two farthest-apart points (marked in red) is the chromaticity non-uniformity Δu'v'.
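Δu'v' can be computed by taking the largest pairwise distance among the measured (u', v') points. The sketch below assumes the instrument reports CIE 1931 (x, y) chromaticity. It converts those values with the standard CIE 1976 UCS formulas u' = 4x/(-2x + 12y + 3) and v' = 9y/(-2x + 12y + 3). If the meter already outputs u'v', the conversion step can be skipped. The readings are hypothetical.

```python
import itertools
import math


def xy_to_uv_prime(x, y):
    """Convert CIE 1931 (x, y) chromaticity to CIE 1976 UCS (u', v')."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 9.0 * y / d


def chromaticity_nonuniformity(uv_points):
    """Delta u'v': the largest Euclidean distance between any two (u', v') points."""
    if len(uv_points) < 2:
        raise ValueError("need at least two measurement points")
    return max(math.dist(p, q) for p, q in itertools.combinations(uv_points, 2))


# Hypothetical (x, y) readings at a few field points.
xy_readings = [(0.3127, 0.3290), (0.3050, 0.3350), (0.3200, 0.3210), (0.3100, 0.3400)]
uv = [xy_to_uv_prime(x, y) for x, y in xy_readings]
print(f"delta u'v' = {chromaticity_nonuniformity(uv):.4f}")
```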

Because gratings are dispersive, diffractive optical waveguides are prone to chromaticity non-uniformity. Once a grating is fabricated, however, its chromaticity non-uniformity is fixed, so it can be compensated through calibration and software correction.

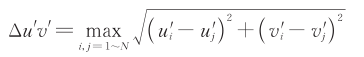

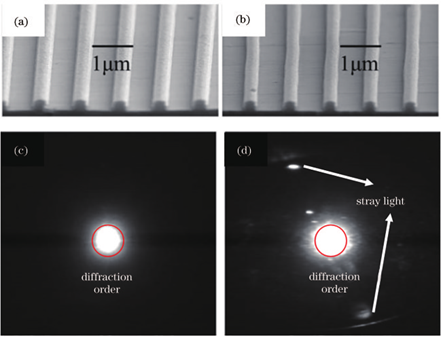

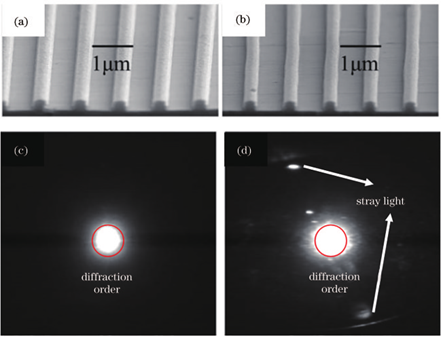

Grating stray light and contrast

For diffractive optical waveguides, the stray light level of the grating is an important but often overlooked parameter.

Grating stray light is light that travels in directions other than the grating's diffraction directions (the directions determined by the grating equation). It comes mainly from high-frequency grating errors such as surface roughness and bending of the grating lines.

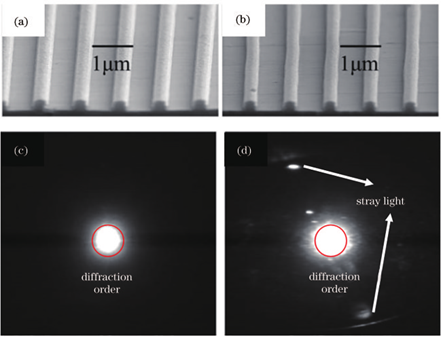

Figure 11: Schematic diagram of grating line bending and the resulting stray light

Figure 11 compares the microscopic morphology of different gratings and the stray light they produce. Figure 11(a) and Figure 11(c) show an ideal grating groove profile and the corresponding diffraction spot. The grating lines in Figure 11(a) are straight and the surface roughness is low, so the energy of the diffraction spot is concentrated and there is no stray light outside the diffraction orders. The grating in Figure 11(b) shows obvious line bending. The energy of the corresponding diffraction spot is dispersed, and there is a large amount of stray light outside the diffraction orders.

Grating stray light brightens areas that should be dark, raising the background noise. This lowers the image contrast, defined as the ratio of the maximum to the minimum brightness.

Figure 12: Schematic diagram of imaging results at different contrast ratios

Figure 12(a) and Figure 12(c) show imaging results at low contrast, while Figure 12(b) and Figure 12(d) show results at high contrast. Reduced contrast makes image details look unclear and colors look dull, which degrades the user experience.

Attention should therefore be paid to the grating's stray light level. Improving the fabrication process to reduce it is of great significance for enhancing the contrast of diffractive optical waveguides.

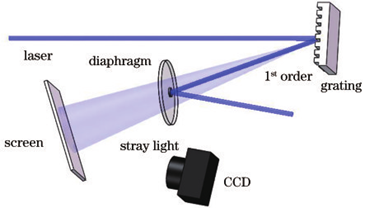

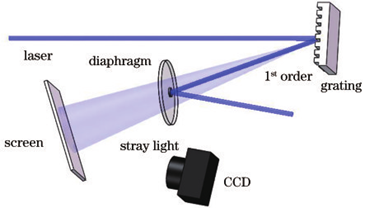

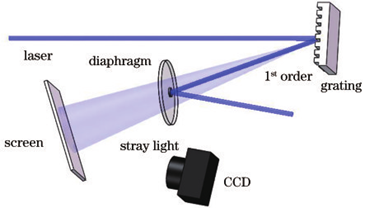

Figure 13: Measurement method of grating stray light

The measurement method for grating stray light is shown in Figure 13. A laser beam is incident on the grating under test, passes through a special transmissive plate, and reaches a white paper screen. The central area of the plate is coated with an opaque metal film. This area is just large enough to block the transmitted order without blocking the rest of the stray light. A CCD records the gray values of the image within a given region of the screen. With the CCD parameters held fixed, the gray value reflects the stray light level of the grating.
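The gray-value readout can be reduced to a single number per grating, sketched here in Python. The choices below are hypothetical and not specified by the source: the evaluation region is a circle around the blocked spot, pixels inside the masked spot are excluded, and the frame is a plain nested list of gray values. As the text requires, numbers are only comparable between gratings measured with the same CCD settings.

```python
def stray_light_level(image, center, block_radius_px, roi_radius_px):
    """Mean gray value in an annulus around the blocked transmitted order.

    image: 2D list of gray values (rows of pixels) captured with fixed CCD settings.
    center: (x, y) pixel position of the blocked transmitted order.
    Pixels within block_radius_px (the masked spot) are excluded; pixels beyond
    roi_radius_px fall outside the evaluation region.
    """
    cx, cy = center
    total, count = 0.0, 0
    for y, row in enumerate(image):
        for x, gray in enumerate(row):
            r2 = (x - cx) ** 2 + (y - cy) ** 2
            if block_radius_px ** 2 < r2 <= roi_radius_px ** 2:
                total += gray
                count += 1
    if count == 0:
        raise ValueError("evaluation region contains no pixels")
    return total / count


# Hypothetical 11x11 frames with uniform backgrounds of 12 and 40 gray levels.
smooth = [[12] * 11 for _ in range(11)]
rough = [[40] * 11 for _ in range(11)]
print(stray_light_level(smooth, (5, 5), 1, 4), stray_light_level(rough, (5, 5), 1, 4))
```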

The contrast of a diffractive optical waveguide is measured with a 4×4 black-and-white checkerboard, as shown in Figure 12(a). Let Lw be the average brightness over all points in the white squares and Lb the average brightness over all points in the black squares. The contrast is their ratio, C = Lw/Lb.
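The checkerboard ratio can be computed in a few lines of Python. The sketch assumes one brightness reading per square and takes the top-left square as white; both are hypothetical conventions. It also shows the link to grating stray light: adding the same background to every square raises Lb proportionally far more than Lw.

```python
def checkerboard_contrast(samples):
    """C = Lw / Lb for a 4x4 checkerboard.

    samples: 4x4 nested list of brightness values (cd/m^2), one per square.
    Assumes square (0, 0) is white, so squares with (row + col) even are white.
    """
    white = [v for i, row in enumerate(samples) for j, v in enumerate(row) if (i + j) % 2 == 0]
    black = [v for i, row in enumerate(samples) for j, v in enumerate(row) if (i + j) % 2 == 1]
    l_w = sum(white) / len(white)
    l_b = sum(black) / len(black)
    return l_w / l_b


# Hypothetical readings: 200 cd/m^2 on white squares, 2 cd/m^2 on black squares.
board = [[200.0 if (i + j) % 2 == 0 else 2.0 for j in range(4)] for i in range(4)]
print(checkerboard_contrast(board))                                       # 100.0
# A uniform 2 cd/m^2 stray-light background on every square roughly halves the contrast.
print(checkerboard_contrast([[v + 2.0 for v in row] for row in board]))  # 50.5
```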

Reference: "Key Parameters of Diffracted Light Waveguide Augmented Reality Near-Eye Display", Progress in Lasers and Optoelectronics

Originally published on the WeChat official account 小小光08: The key parameters and measurement methods of diffractive optical waveguides